Large documents in redis: is it worth compressing them? (Part 1)

At Forest Admin, we build admin panels for which we need to compute and cache large JSON documents. These documents are stored in redis and retrieved from this storage in order to be as fast as possible.

🐘 The problem with large JSON documents: latency

Some of these JSON documents can weigh more than 20 MB. Storing and retrieving such a large document introduces latency in our services, as the data needs to be uploaded or downloaded through the network.

So, every time we need to retrieve one of these documents from the server, we download up to 20 MB (in the worst case) from redis and parse the received JSON.

Looking at performance logs in production, I could see that storing and retrieving these documents cost us a significant amount of time.

I wanted to test whether it was worth uploading compressed JSON documents to redis instead of plain JSON content.

That’s why I created a repository on GitHub with some code to run benchmarks.

🕗 Protocol

I want to evaluate the total time of pushing a document and retrieving it from redis, with 5 different implementations:

- none: JSON.stringify() + push the result to redis

- brotli: JSON.stringify() + brotli + push the buffer to redis

- deflate: JSON.stringify() + deflate + push the buffer to redis

- gzip: JSON.stringify() + gzip + push the buffer to redis

- msgpack: msgpack.pack() + push the buffer to redis
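As a rough illustration of the implementations listed above, here is a minimal sketch built on Node's built-in zlib module. The `codecs` table and its `encode`/`decode` names are my own assumptions for this example, not the code from the benchmark repository. msgpack is left out because it needs a third-party package, and the actual push to redis is omitted.

```javascript
const zlib = require('zlib');

// Hypothetical codec table: `encode` produces the payload that would be
// pushed to redis, `decode` turns the retrieved payload back into an object.
const codecs = {
  none: {
    encode: (doc) => JSON.stringify(doc),
    decode: (payload) => JSON.parse(payload),
  },
  brotli: {
    encode: (doc) => zlib.brotliCompressSync(JSON.stringify(doc)),
    decode: (payload) => JSON.parse(zlib.brotliDecompressSync(payload).toString()),
  },
  deflate: {
    encode: (doc) => zlib.deflateSync(JSON.stringify(doc)),
    decode: (payload) => JSON.parse(zlib.inflateSync(payload).toString()),
  },
  gzip: {
    encode: (doc) => zlib.gzipSync(JSON.stringify(doc)),
    decode: (payload) => JSON.parse(zlib.gunzipSync(payload).toString()),
  },
};
```

Every compressed variant goes through two steps (stringify, then compress), and the result is a Buffer rather than a string.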

Each compression algorithm will be evaluated with every possible compression level:

- brotli: 1 to 11

- deflate: -1 to 9

- gzip: -1 to 9
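The level ranges above could be enumerated along these lines. This is only a sketch: the `range` helper and the `variants` array are hypothetical names. With Node's zlib, brotli takes its quality through `params`, while deflate and gzip take a `level` option (-1 meaning the library default).

```javascript
const zlib = require('zlib');

// Inclusive integer range, e.g. range(1, 3) gives [1, 2, 3].
function range(from, to) {
  return Array.from({ length: to - from + 1 }, (_, i) => from + i);
}

// One entry per (algorithm, level) pair to benchmark.
const variants = [
  ...range(1, 11).map((level) => ({
    algorithm: 'brotli',
    level,
    compress: (input) =>
      zlib.brotliCompressSync(input, {
        params: { [zlib.constants.BROTLI_PARAM_QUALITY]: level },
      }),
  })),
  ...range(-1, 9).map((level) => ({
    algorithm: 'deflate',
    level,
    compress: (input) => zlib.deflateSync(input, { level }),
  })),
  ...range(-1, 9).map((level) => ({
    algorithm: 'gzip',
    level,
    compress: (input) => zlib.gzipSync(input, { level }),
  })),
];
```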

JSON documents will be retrieved from a redis server, based on a key pattern. For my own test, I will use actual JSON documents of various sizes that we handle in production.

The benchmark is run against a redis server, and in my case, it’ll be a local server running in docker.
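To make the measurement concrete, here is a sketch of how one upload and one download could be timed. It is an assumption about the general shape of such a benchmark, not the repository's code: `createMemoryStore` is a hypothetical in-memory stand-in for a redis client, and `timeRoundTrip` is an illustrative name. A real run would pass an object whose `set` and `get` talk to redis.

```javascript
const zlib = require('zlib');

// Hypothetical in-memory stand-in for a redis client with async set/get.
function createMemoryStore() {
  const data = new Map();
  return {
    async set(key, value) { data.set(key, value); },
    async get(key) { return data.get(key); },
  };
}

// Times one upload (encode + set) and one download (get + decode).
// Durations are returned in milliseconds.
async function timeRoundTrip(store, key, doc, encode, decode) {
  const start = process.hrtime.bigint();
  await store.set(key, encode(doc));
  const uploaded = process.hrtime.bigint();
  const result = decode(await store.get(key));
  const downloaded = process.hrtime.bigint();
  return {
    uploadMs: Number(uploaded - start) / 1e6,
    downloadMs: Number(downloaded - uploaded) / 1e6,
    result,
  };
}

// Example codec: JSON + gzip at level 3.
const encode = (doc) => zlib.gzipSync(JSON.stringify(doc), { level: 3 });
const decode = (buf) => JSON.parse(zlib.gunzipSync(buf).toString());
```

Measuring encode and transfer together matters here, since the question is whether the time spent compressing is paid back by a smaller payload.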

Finally, these tests are run on my MacBook Pro (2.8 GHz Intel Core i7), on Node 14.

📈 Results

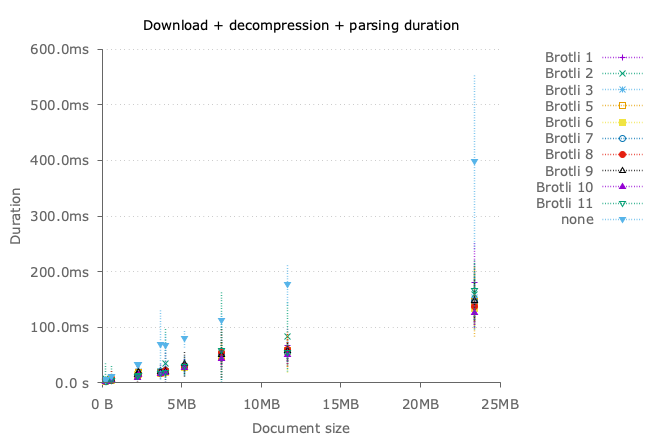

Brotli compression

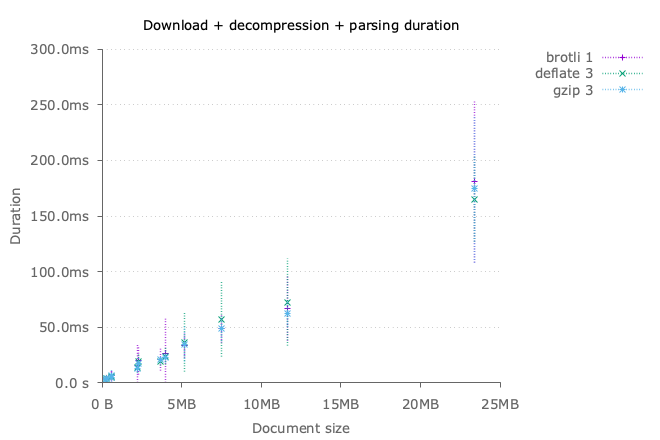

We can see that a document compressed with brotli is always faster to retrieve and decompress than a large uncompressed document is to retrieve.

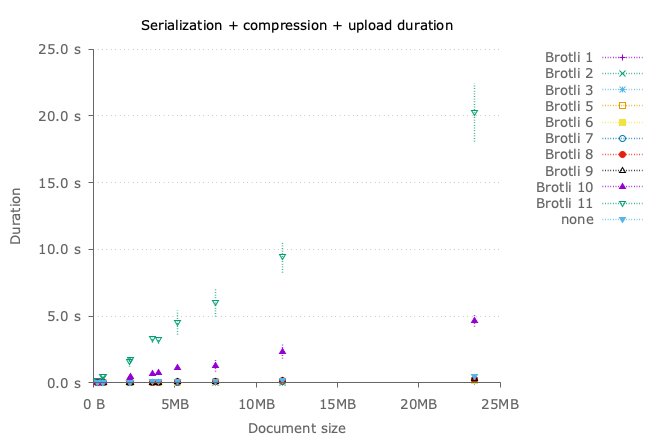

These first results show that ⚠ brotli is very slow during compression, when used with quality levels of 10 and 11. That’s something worth noting as 11 is the default value in node 14.

Using brotli with its default value is actually slower than not doing anything for document upload.
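Since the default quality is the slow one, the quality has to be set explicitly. A minimal sketch with Node's zlib follows; the `brotliCompress` wrapper is a hypothetical helper name, and only `BROTLI_PARAM_QUALITY` is set, with everything else left at its default.

```javascript
const zlib = require('zlib');

// Compresses `input` with brotli at an explicit quality (1 to 11 in this
// benchmark) instead of relying on the default.
function brotliCompress(input, quality) {
  return zlib.brotliCompressSync(input, {
    params: { [zlib.constants.BROTLI_PARAM_QUALITY]: quality },
  });
}

const json = JSON.stringify({ items: Array.from({ length: 100 }, (_, i) => ({ id: i })) });
const fast = brotliCompress(json, 1);   // cheapest level tested here
const small = brotliCompress(json, 11); // same as the default quality
```

Both buffers decompress with `zlib.brotliDecompressSync`, whatever quality was used to produce them.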

I plotted another graph without these 2 values for brotli compression, to be able to compare quality levels.

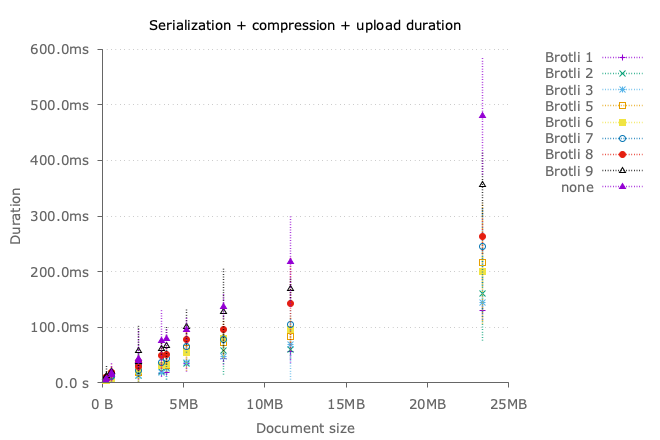

With this third plot, we can see that every other quality level uploads our documents to redis faster than no compression does.

We can also see that the lowest quality level performs best under the conditions of my experiments. This might change, for instance, with a remote redis server.

To summarize

- Download:

- 🏅 brotli-11 for download performance

- ✅ every compression level has better performance

- Upload:

- 🏅 brotli-1 for upload performance

- ✅ compression levels up to 9 are faster than no compression

- ❌ brotli-10 and brotli-11 are slower than no compression

- Potential winner:

- 🏅 brotli-1

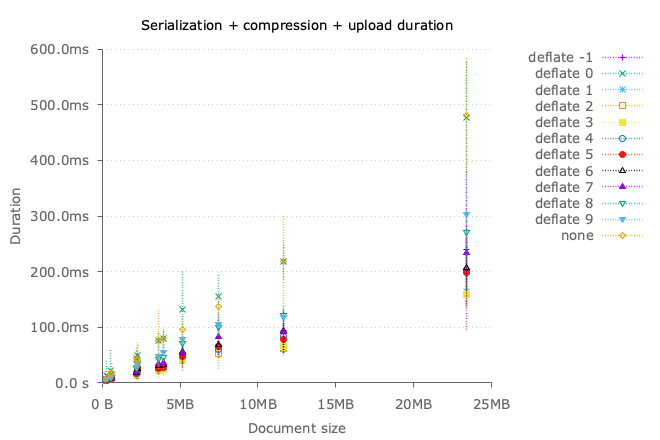

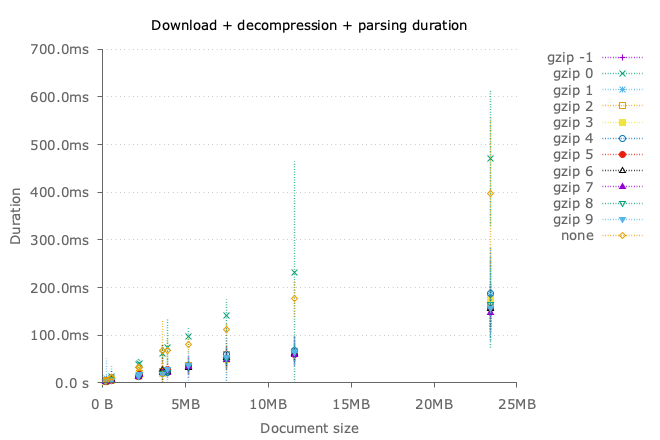

Deflate compression

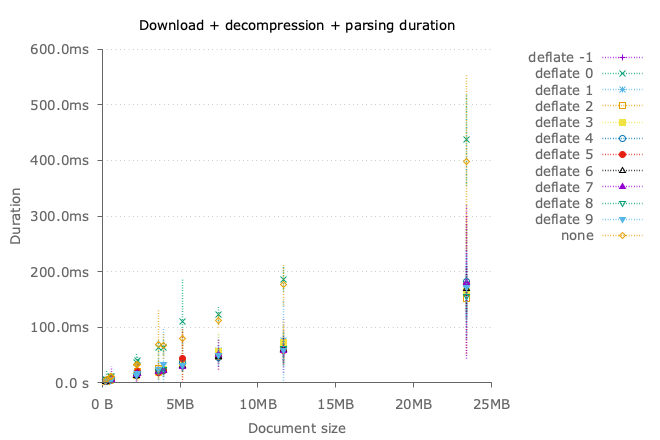

Deflate gives the same results as brotli here: it’s always faster to retrieve compressed documents from redis than uncompressed ones.

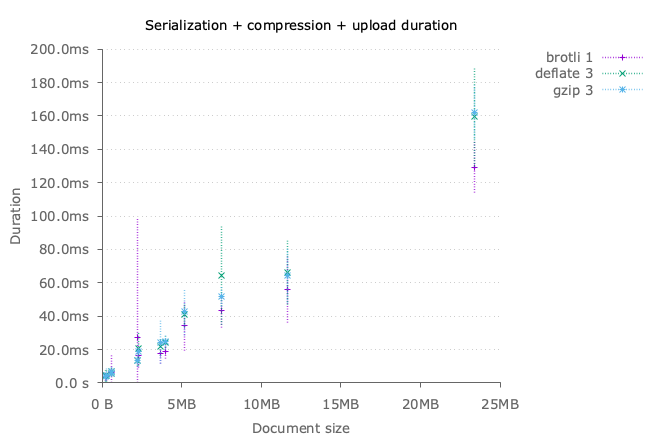

Unlike with brotli, it’s also always faster to upload compressed documents to redis with deflate, whatever compression level we choose.

We can see that deflate 3 seems to be the fastest level for uploading documents.

To summarize

- Download:

- ✅ Results are very similar across compression levels, and we cannot spot a clear winner from the results

- Upload:

- 🏅 deflate-3 seems to be slightly faster than other compression levels

- ✅ all compression levels are faster to use than nothing at all

- Potential winner:

- 🏅 deflate-3

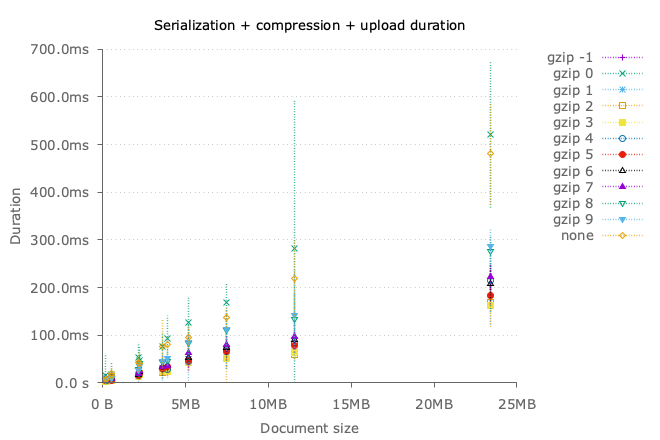

Gzip compression

With gzip also, it’s always faster to retrieve compressed documents from redis than uncompressed documents.

Using gzip to upload documents is also always faster, but we can see a clear difference with the strongest compression levels (8 & 9), which seem a little slower than the others.

To summarize

- Download:

- ✅ Results are very similar across compression levels, and we cannot spot a clear winner from the results

- Upload:

- 🏅 gzip-3 seems to be slightly faster than other compression levels

- ✅ all compression levels are faster to use than nothing at all

- Potential winner:

- 🏅 gzip-3

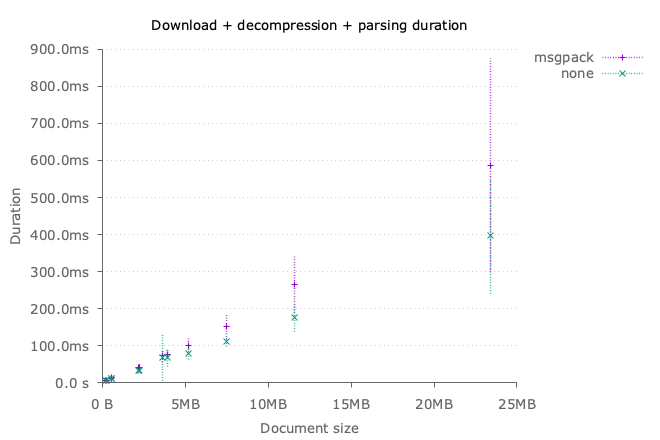

msgpack

The msgpack library serializes JavaScript objects into a smaller form than the standard JSON.stringify. It is documented as slower than JSON, but I wanted to measure whether the time spent serializing was compensated by a faster transfer.

It also has the advantage of transforming objects into a buffer in one operation, contrary to the solutions using a compression algorithm:

- msgpack transforms an object into a buffer

- solutions with compression require 2 steps: one to transform the object to JSON, another to compress the resulting string.

Results are not good for msgpack in my local environment: it seems always slower to use msgpack than just sending plain JSON documents to my redis server.

⚠ This library can still produce interesting results when the network gets slower. In my situation, it cannot be faster than solutions based on compression, because the size reduction obtained with msgpack (around 60%) is a lot less interesting than the one obtained with compression algorithms (more than 90%).
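For reference, a size reduction like the ones quoted above can be computed as follows. This sketch assumes the percentages are space savings (share of the original bytes removed), which the article does not state explicitly. The `spaceSaving` helper and the sample document are made up for illustration, so the figure it prints says nothing about the production documents.

```javascript
const zlib = require('zlib');

// Share of the original bytes removed by the encoding, between 0 and 1.
function spaceSaving(originalBytes, encodedBytes) {
  return 1 - encodedBytes / originalBytes;
}

// Synthetic, highly repetitive sample document (not production data).
const doc = {
  rows: Array.from({ length: 1000 }, (_, i) => ({ id: i, status: 'active', label: 'row' })),
};
const json = JSON.stringify(doc);
const gzipped = zlib.gzipSync(json);

const saving = spaceSaving(Buffer.byteLength(json), gzipped.length);
console.log(`gzip saves ${(saving * 100).toFixed(1)}% on this sample`);
```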

Upload results are not good either, for msgpack.

To summarize

❌ msgpack is slower than the original implementation both for upload & download

Final match: brotli-1 vs deflate-3 vs gzip-3

Results here are very similar between compression algorithms.

Regarding upload time, brotli-1 seems to have a clear advantage over other algorithms.

And the winner is: 🏅 brotli-1

Next step: validate these results on a setup that is closer to production:

- on an instance that has similar performance to production instances

- use a remote redis instance, similar to the one used in production.

These results will be shared in a part 2 of this series!
